---
license: cc-by-nc-4.0
model-index:
- name: mixtral_7bx4_moe
  results:
  - task:
      type: text-generation
      name: Text Generation
    dataset:
      name: AI2 Reasoning Challenge (25-Shot)
      type: ai2_arc
      config: ARC-Challenge
      split: test
      args:
        num_few_shot: 25
    metrics:
    - type: acc_norm
      value: 65.27
      name: normalized accuracy
    source:
      url: >-
        https://huggingface.co/spaces/HuggingFaceH4/open_llm_leaderboard?query=cloudyu/mixtral_7bx4_moe
      name: Open LLM Leaderboard
  - task:
      type: text-generation
      name: Text Generation
    dataset:
      name: HellaSwag (10-Shot)
      type: hellaswag
      split: validation
      args:
        num_few_shot: 10
    metrics:
    - type: acc_norm
      value: 85.28
      name: normalized accuracy
    source:
      url: >-
        https://huggingface.co/spaces/HuggingFaceH4/open_llm_leaderboard?query=cloudyu/mixtral_7bx4_moe
      name: Open LLM Leaderboard
  - task:
      type: text-generation
      name: Text Generation
    dataset:
      name: MMLU (5-Shot)
      type: cais/mmlu
      config: all
      split: test
      args:
        num_few_shot: 5
    metrics:
    - type: acc
      value: 62.84
      name: accuracy
    source:
      url: >-
        https://huggingface.co/spaces/HuggingFaceH4/open_llm_leaderboard?query=cloudyu/mixtral_7bx4_moe
      name: Open LLM Leaderboard
  - task:
      type: text-generation
      name: Text Generation
    dataset:
      name: TruthfulQA (0-shot)
      type: truthful_qa
      config: multiple_choice
      split: validation
      args:
        num_few_shot: 0
    metrics:
    - type: mc2
      value: 59.85
    source:
      url: >-
        https://huggingface.co/spaces/HuggingFaceH4/open_llm_leaderboard?query=cloudyu/mixtral_7bx4_moe
      name: Open LLM Leaderboard
  - task:
      type: text-generation
      name: Text Generation
    dataset:
      name: Winogrande (5-shot)
      type: winogrande
      config: winogrande_xl
      split: validation
      args:
        num_few_shot: 5
    metrics:
    - type: acc
      value: 77.66
      name: accuracy
    source:
      url: >-
        https://huggingface.co/spaces/HuggingFaceH4/open_llm_leaderboard?query=cloudyu/mixtral_7bx4_moe
      name: Open LLM Leaderboard
  - task:
      type: text-generation
      name: Text Generation
    dataset:
      name: GSM8k (5-shot)
      type: gsm8k
      config: main
      split: test
      args:
        num_few_shot: 5
    metrics:
    - type: acc
      value: 62.09
      name: accuracy
    source:
      url: >-
        https://huggingface.co/spaces/HuggingFaceH4/open_llm_leaderboard?query=cloudyu/mixtral_7bx4_moe
      name: Open LLM Leaderboard
---

I don't know why this model has so many downloads. Please share your use cases, thanks.

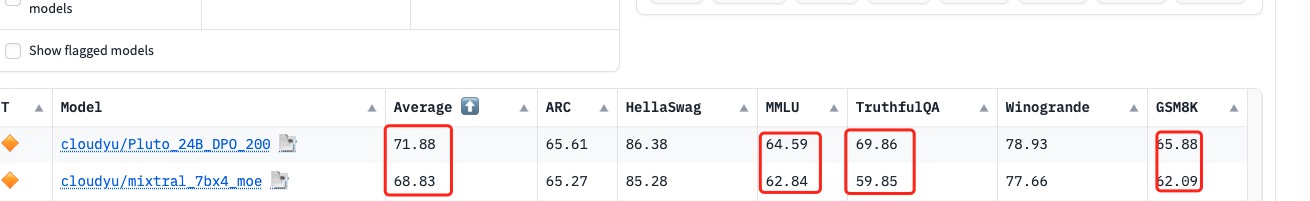

This model has since been improved with DPO and released as cloudyu/Pluto_24B_DPO_200.

Mixtral MOE 4x7B

A mixture of experts (MoE) built from the following models with mergekit:

- Q-bert/MetaMath-Cybertron-Starling

- mistralai/Mistral-7B-Instruct-v0.2

- teknium/Mistral-Trismegistus-7B

- meta-math/MetaMath-Mistral-7B

- openchat/openchat-3.5-1210
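To give a rough idea of what "mixture of experts" means at inference time, here is a minimal sketch of per-token expert routing. It assumes Mixtral-style top-2 routing with a softmax over the selected experts; this card does not state how many experts are active per token, and the toy `experts` and `logits` below are hypothetical stand-ins, not anything taken from the merged model.

```python
import math


def softmax(values):
    """Numerically stable softmax over a list of floats."""
    peak = max(values)
    exps = [math.exp(v - peak) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def route_top_k(router_logits, k=2):
    """Pick the k highest-scoring experts and renormalize their weights."""
    ranked = sorted(
        range(len(router_logits)), key=lambda i: router_logits[i], reverse=True
    )
    top = ranked[:k]
    weights = softmax([router_logits[i] for i in top])
    return list(zip(top, weights))


def moe_forward(x, experts, router_logits, k=2):
    """Weighted sum of the selected experts' outputs for one token."""
    return sum(w * experts[i](x) for i, w in route_top_k(router_logits, k))


# Four toy "experts" (hypothetical; real experts are feed-forward blocks).
experts = [lambda x: x + 1, lambda x: x * 2, lambda x: x - 3, lambda x: x * x]
logits = [0.1, 2.0, -1.0, 2.0]  # made-up router scores for one token

selected = route_top_k(logits)
output = moe_forward(3.0, experts, logits)
print(selected, output)
```

Only the selected experts run for a given token, which is why a model of this kind can hold more parameters than it uses per forward step.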

Metrics

- Average: 68.85
- ARC: 65.36
- HellaSwag: 85.23
- More details: https://huggingface.co/datasets/open-llm-leaderboard/results/blob/main/cloudyu/Mixtral_7Bx4_MOE_24B/results_2023-12-23T18-05-51.243288.json

GPU code example

```python
import torch
from transformers import AutoTokenizer, AutoModelForCausalLM

# v2 models
model_path = "cloudyu/Mixtral_7Bx4_MOE_24B"

tokenizer = AutoTokenizer.from_pretrained(model_path, use_default_system_prompt=False)
# load_in_4bit quantizes the weights on load (requires the bitsandbytes package)
model = AutoModelForCausalLM.from_pretrained(
    model_path,
    torch_dtype=torch.float32,
    device_map="auto",
    local_files_only=False,
    load_in_4bit=True,
)
print(model)

# Keep generating until an empty prompt is entered
prompt = input("please input prompt:")
while len(prompt) > 0:
    input_ids = tokenizer(prompt, return_tensors="pt").input_ids.to("cuda")
    generation_output = model.generate(
        input_ids=input_ids, max_new_tokens=500, repetition_penalty=1.2
    )
    print(tokenizer.decode(generation_output[0]))
    prompt = input("please input prompt:")
```
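Note that for decoder-only models `generate` returns the prompt tokens followed by the new tokens, so the example above prints the prompt again along with the answer. A minimal sketch of keeping only the completion is shown here on plain lists; `new_tokens_only` is a hypothetical helper and the token IDs are made up for illustration.

```python
def new_tokens_only(output_ids, prompt_length):
    """Return the generated token IDs that follow the prompt."""
    return output_ids[prompt_length:]


# Made-up token IDs standing in for a tokenized prompt and a model output.
prompt_ids = [1, 15043, 3186]
output_ids = prompt_ids + [29991, 2]

completion_ids = new_tokens_only(output_ids, len(prompt_ids))
print(completion_ids)
```

With the real tensors the same idea would be `generation_output[0][input_ids.shape[1]:]`, and passing `skip_special_tokens=True` to `tokenizer.decode` drops markers such as the end-of-sequence token.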

CPU example

```python
import torch
from transformers import AutoTokenizer, AutoModelForCausalLM

# v2 models
model_path = "cloudyu/Mixtral_7Bx4_MOE_24B"

tokenizer = AutoTokenizer.from_pretrained(model_path, use_default_system_prompt=False)
# Full-precision weights on CPU: no quantization, so this needs a lot of RAM
model = AutoModelForCausalLM.from_pretrained(
    model_path,
    torch_dtype=torch.float32,
    device_map="cpu",
    local_files_only=False,
)
print(model)

# Keep generating until an empty prompt is entered
prompt = input("please input prompt:")
while len(prompt) > 0:
    input_ids = tokenizer(prompt, return_tensors="pt").input_ids
    generation_output = model.generate(
        input_ids=input_ids, max_new_tokens=500, repetition_penalty=1.2
    )
    print(tokenizer.decode(generation_output[0]))
    prompt = input("please input prompt:")
```
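The GPU example loads the weights in 4-bit while the CPU example keeps them in float32, which makes a big difference in memory. Here is a back-of-the-envelope estimate, assuming roughly 24 billion parameters (taken from the "24B" in the model name, not an exact count) and counting weights only, with no activations, KV cache, or quantization overhead.

```python
def weight_memory_gib(num_params, bits_per_param):
    """Approximate memory for the weights alone, in GiB."""
    return num_params * bits_per_param / 8 / 1024**3


ASSUMED_PARAMS = 24e9  # assumption based on the "24B" in the model name

fp32_gib = weight_memory_gib(ASSUMED_PARAMS, 32)
int4_gib = weight_memory_gib(ASSUMED_PARAMS, 4)

print(f"float32: {fp32_gib:.1f} GiB, 4-bit: {int4_gib:.1f} GiB")
```

Under these assumptions float32 needs about eight times the memory of 4-bit, so the CPU example is only practical on a machine with a lot of RAM.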

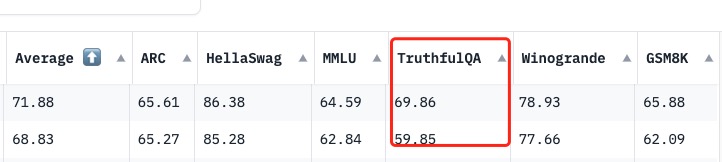

Open LLM Leaderboard Evaluation Results

Detailed results can be found here

| Metric | Value |
|---|---|
| Avg. | 68.83 |
| AI2 Reasoning Challenge (25-Shot) | 65.27 |
| HellaSwag (10-Shot) | 85.28 |
| MMLU (5-Shot) | 62.84 |
| TruthfulQA (0-shot) | 59.85 |
| Winogrande (5-shot) | 77.66 |
| GSM8k (5-shot) | 62.09 |
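The "Avg." row appears to be the plain mean of the six benchmark scores. A quick check, assuming equal weighting and rounding to two decimals:

```python
# Scores copied from the table above.
scores = {
    "AI2 Reasoning Challenge (25-Shot)": 65.27,
    "HellaSwag (10-Shot)": 85.28,
    "MMLU (5-Shot)": 62.84,
    "TruthfulQA (0-shot)": 59.85,
    "Winogrande (5-shot)": 77.66,
    "GSM8k (5-shot)": 62.09,
}

# Unweighted mean, rounded the way the table reports it.
average = round(sum(scores.values()) / len(scores), 2)
print(average)
```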