Commit 210f9ee

Parent(s): 49b97d3

Upload 26 files

- .gitattributes +1 -0

- README.md +373 -0

- controlnet_utils.py +40 -0

- images/bag.png +0 -0

- images/bag_scribble.png +0 -0

- images/bag_scribble_out.png +0 -0

- images/bird.png +3 -0

- images/bird_canny.png +0 -0

- images/bird_canny_out.png +0 -0

- images/chef_pose_out.png +0 -0

- images/house.png +0 -0

- images/house_seg.png +0 -0

- images/house_seg_out.png +0 -0

- images/man.png +0 -0

- images/man_hed.png +0 -0

- images/man_hed_out.png +0 -0

- images/openpose.png +0 -0

- images/pose.png +0 -0

- images/room.png +0 -0

- images/room_mlsd.png +0 -0

- images/room_mlsd_out.png +0 -0

- images/stormtrooper.png +0 -0

- images/stormtrooper_depth.png +0 -0

- images/stormtrooper_depth_out.png +0 -0

- images/toy.png +0 -0

- images/toy_normal.png +0 -0

- images/toy_normal_out.png +0 -0

.gitattributes

CHANGED

|

@@ -32,3 +32,4 @@ saved_model/**/* filter=lfs diff=lfs merge=lfs -text


| 32 |

*.zip filter=lfs diff=lfs merge=lfs -text

|

| 33 |

*.zst filter=lfs diff=lfs merge=lfs -text

|

| 34 |

*tfevents* filter=lfs diff=lfs merge=lfs -text

|

| 35 |

+

images/bird.png filter=lfs diff=lfs merge=lfs -text

|

README.md

CHANGED

|

@@ -1,3 +1,376 @@

|


| 1 |

---

|

| 2 |

license: openrail

|

| 3 |

---

|

| 4 |

+

|

| 5 |

+

# ControlNet

|

| 6 |

+

|

| 7 |

+

ControlNet is an auxiliary model that augments pre-trained diffusion models with additional conditioning.

|

| 8 |

+

|

| 9 |

+

ControlNet comes with multiple auxiliary models, each of which allows a different type of conditioning.

|

| 10 |

+

|

| 11 |

+

ControlNet's auxiliary models are trained with Stable Diffusion 1.5. Experimentally, the auxiliary models can also be used with other diffusion models, such as a DreamBooth fine-tuned Stable Diffusion.

|

| 12 |

+

|

| 13 |

+

The auxiliary conditioning is passed directly to the diffusers pipeline. If you want to process an image to create the auxiliary conditioning, external dependencies are required.

|

| 14 |

+

|

| 15 |

+

Some of the additional conditionings can be extracted from images via additional models. We extracted these

|

| 16 |

+

additional models from the original ControlNet repo into a separate package that can be found on [GitHub](https://github.com/patrickvonplaten/human_pose.git).

|

| 17 |

+

|

| 18 |

+

## Canny edge detection

|

| 19 |

+

|

| 20 |

+

Install OpenCV:

|

| 21 |

+

|

| 22 |

+

```sh

|

| 23 |

+

$ pip install opencv-contrib-python

|

| 24 |

+

```

|

| 25 |

+

|

| 26 |

+

```python

|

| 27 |

+

import cv2

|

| 28 |

+

from PIL import Image

|

| 29 |

+

from diffusers import StableDiffusionControlNetPipeline, ControlNetModel

|

| 30 |

+

import torch

|

| 31 |

+

import numpy as np

|

| 32 |

+

|

| 33 |

+

image = Image.open('images/bird.png')

|

| 34 |

+

image = np.array(image)

|

| 35 |

+

|

| 36 |

+

low_threshold = 100

|

| 37 |

+

high_threshold = 200

|

| 38 |

+

|

| 39 |

+

image = cv2.Canny(image, low_threshold, high_threshold)

|

| 40 |

+

image = image[:, :, None]

|

| 41 |

+

image = np.concatenate([image, image, image], axis=2)

|

| 42 |

+

image = Image.fromarray(image)

|

| 43 |

+

|

| 44 |

+

controlnet = ControlNetModel.from_pretrained(

|

| 45 |

+

"fusing/stable-diffusion-v1-5-controlnet-canny",

|

| 46 |

+

)

|

| 47 |

+

|

| 48 |

+

pipe = StableDiffusionControlNetPipeline.from_pretrained(

|

| 49 |

+

"runwayml/stable-diffusion-v1-5", controlnet=controlnet, safety_checker=None

|

| 50 |

+

)

|

| 51 |

+

pipe.to('cuda')

|

| 52 |

+

|

| 53 |

+

image = pipe("bird", image).images[0]

|

| 54 |

+

|

| 55 |

+

image.save('images/bird_canny_out.png')

|

| 56 |

+

```
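The channel-replication step in the snippet above (`image[:, :, None]` followed by `np.concatenate`) can be illustrated without any dependencies. This is a minimal pure-Python sketch of the idea only; `replicate_channels` is a hypothetical helper name and is not part of this README or of diffusers.

```python
def replicate_channels(gray, channels=3):
    """Turn an H x W grid of intensities into an H x W x `channels` grid.

    Mirrors what `np.concatenate([image, image, image], axis=2)` does to
    the single-channel Canny output, using plain nested lists.
    """
    return [[[value] * channels for value in row] for row in gray]


# A tiny 2 x 2 "edge map": 255 marks an edge pixel, 0 marks background.
edges = [[0, 255],
         [255, 0]]
rgb = replicate_channels(edges)
print(rgb[0][1])  # [255, 255, 255]
```

The pipeline expects a three-channel conditioning image, which is why the single-channel edge map is copied into every channel before `Image.fromarray`.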

|

| 57 |

+

|

| 58 |

+

|

| 59 |

+

|

| 60 |

+

|

| 61 |

+

|

| 62 |

+

|

| 63 |

+

|

| 64 |

+

## M-LSD Straight line detection

|

| 65 |

+

|

| 66 |

+

Install the additional controlnet models package.

|

| 67 |

+

|

| 68 |

+

```sh

|

| 69 |

+

$ pip install git+https://github.com/patrickvonplaten/human_pose.git

|

| 70 |

+

```

|

| 71 |

+

|

| 72 |

+

```py

|

| 73 |

+

from PIL import Image

|

| 74 |

+

from diffusers import StableDiffusionControlNetPipeline, ControlNetModel

|

| 75 |

+

import torch

|

| 76 |

+

from human_pose import MLSDdetector

|

| 77 |

+

|

| 78 |

+

mlsd = MLSDdetector.from_pretrained('lllyasviel/ControlNet')

|

| 79 |

+

|

| 80 |

+

image = Image.open('images/room.png')

|

| 81 |

+

|

| 82 |

+

image = mlsd(image)

|

| 83 |

+

|

| 84 |

+

controlnet = ControlNetModel.from_pretrained(

|

| 85 |

+

"fusing/stable-diffusion-v1-5-controlnet-mlsd",

|

| 86 |

+

)

|

| 87 |

+

|

| 88 |

+

pipe = StableDiffusionControlNetPipeline.from_pretrained(

|

| 89 |

+

"runwayml/stable-diffusion-v1-5", controlnet=controlnet, safety_checker=None

|

| 90 |

+

)

|

| 91 |

+

pipe.to('cuda')

|

| 92 |

+

|

| 93 |

+

image = pipe("room", image).images[0]

|

| 94 |

+

|

| 95 |

+

image.save('images/room_mlsd_out.png')

|

| 96 |

+

```

|

| 97 |

+

|

| 98 |

+

|

| 99 |

+

|

| 100 |

+

|

| 101 |

+

|

| 102 |

+

|

| 103 |

+

|

| 104 |

+

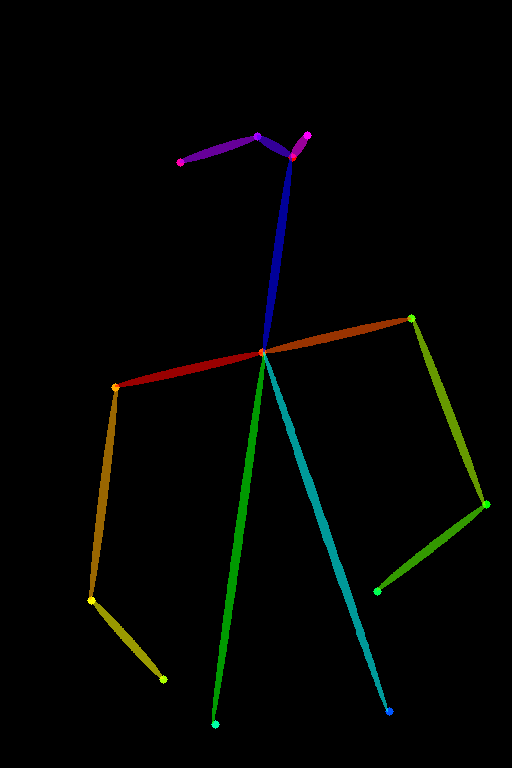

## Pose estimation

|

| 105 |

+

|

| 106 |

+

Install the additional controlnet models package.

|

| 107 |

+

|

| 108 |

+

```sh

|

| 109 |

+

$ pip install git+https://github.com/patrickvonplaten/human_pose.git

|

| 110 |

+

```

|

| 111 |

+

|

| 112 |

+

```py

|

| 113 |

+

from PIL import Image

|

| 114 |

+

from diffusers import StableDiffusionControlNetPipeline, ControlNetModel

|

| 115 |

+

import torch

|

| 116 |

+

from human_pose import OpenposeDetector

|

| 117 |

+

|

| 118 |

+

openpose = OpenposeDetector.from_pretrained('lllyasviel/ControlNet')

|

| 119 |

+

|

| 120 |

+

image = Image.open('images/pose.png')

|

| 121 |

+

|

| 122 |

+

image = openpose(image)

|

| 123 |

+

|

| 124 |

+

controlnet = ControlNetModel.from_pretrained(

|

| 125 |

+

"fusing/stable-diffusion-v1-5-controlnet-openpose",

|

| 126 |

+

)

|

| 127 |

+

|

| 128 |

+

pipe = StableDiffusionControlNetPipeline.from_pretrained(

|

| 129 |

+

"runwayml/stable-diffusion-v1-5", controlnet=controlnet, safety_checker=None

|

| 130 |

+

)

|

| 131 |

+

pipe.to('cuda')

|

| 132 |

+

|

| 133 |

+

image = pipe("chef in the kitchen", image).images[0]

|

| 134 |

+

|

| 135 |

+

image.save('images/chef_pose_out.png')

|

| 136 |

+

```

|

| 137 |

+

|

| 138 |

+

|

| 139 |

+

|

| 140 |

+

|

| 141 |

+

|

| 142 |

+

|

| 143 |

+

|

| 144 |

+

## Semantic Segmentation

|

| 145 |

+

|

| 146 |

+

Semantic segmentation relies on transformers. Transformers is a

|

| 147 |

+

dependency of diffusers for running controlnet, so you should

|

| 148 |

+

have it installed already.

|

| 149 |

+

|

| 150 |

+

```py

|

| 151 |

+

from transformers import AutoImageProcessor, UperNetForSemanticSegmentation

|

| 152 |

+

from PIL import Image

|

| 153 |

+

import numpy as np

|

| 154 |

+

from controlnet_utils import ade_palette

|

| 155 |

+

import torch

|

| 156 |

+

from diffusers import StableDiffusionControlNetPipeline, ControlNetModel

|

| 157 |

+

|

| 158 |

+

image_processor = AutoImageProcessor.from_pretrained("openmmlab/upernet-convnext-small")

|

| 159 |

+

image_segmentor = UperNetForSemanticSegmentation.from_pretrained("openmmlab/upernet-convnext-small")

|

| 160 |

+

|

| 161 |

+

image = Image.open("./images/house.png").convert('RGB')

|

| 162 |

+

|

| 163 |

+

pixel_values = image_processor(image, return_tensors="pt").pixel_values

|

| 164 |

+

|

| 165 |

+

with torch.no_grad():

|

| 166 |

+

    outputs = image_segmentor(pixel_values)

|

| 167 |

+

|

| 168 |

+

seg = image_processor.post_process_semantic_segmentation(outputs, target_sizes=[image.size[::-1]])[0]

|

| 169 |

+

|

| 170 |

+

color_seg = np.zeros((seg.shape[0], seg.shape[1], 3), dtype=np.uint8) # height, width, 3

|

| 171 |

+

|

| 172 |

+

palette = np.array(ade_palette())

|

| 173 |

+

|

| 174 |

+

for label, color in enumerate(palette):

|

| 175 |

+

    color_seg[seg == label, :] = color

|

| 176 |

+

|

| 177 |

+

color_seg = color_seg.astype(np.uint8)

|

| 178 |

+

|

| 179 |

+

image = Image.fromarray(color_seg)

|

| 180 |

+

|

| 181 |

+

controlnet = ControlNetModel.from_pretrained(

|

| 182 |

+

"fusing/stable-diffusion-v1-5-controlnet-seg",

|

| 183 |

+

)

|

| 184 |

+

|

| 185 |

+

pipe = StableDiffusionControlNetPipeline.from_pretrained(

|

| 186 |

+

"runwayml/stable-diffusion-v1-5", controlnet=controlnet, safety_checker=None

|

| 187 |

+

)

|

| 188 |

+

pipe.to('cuda')

|

| 189 |

+

|

| 190 |

+

image = pipe("house", image).images[0]

|

| 191 |

+

|

| 192 |

+

image.save('./images/house_seg_out.png')

|

| 193 |

+

```
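The coloring loop above (`for label, color in enumerate(palette)`) paints every pixel with the RGB triple of its predicted class. A dependency-free sketch of the same mapping follows; `colorize` is a hypothetical name used only for illustration, and the three colors are the first three entries returned by `ade_palette()` in `controlnet_utils.py`.

```python
def colorize(seg, palette):
    """Map each class index in `seg` (H x W) to its RGB color from `palette`."""
    return [[list(palette[label]) for label in row] for row in seg]


# First three ADE20K colors as listed in controlnet_utils.py.
palette = [[120, 120, 120], [180, 120, 120], [6, 230, 230]]

# A tiny 2 x 2 segmentation map of class indices.
seg = [[0, 2],
       [1, 0]]

color_seg = colorize(seg, palette)
print(color_seg[0][1])  # [6, 230, 230]
```

The NumPy version in the README reaches the same result with boolean masks (`seg == label`), which scales better to full-size images.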

|

| 194 |

+

|

| 195 |

+

|

| 196 |

+

|

| 197 |

+

|

| 198 |

+

|

| 199 |

+

|

| 200 |

+

|

| 201 |

+

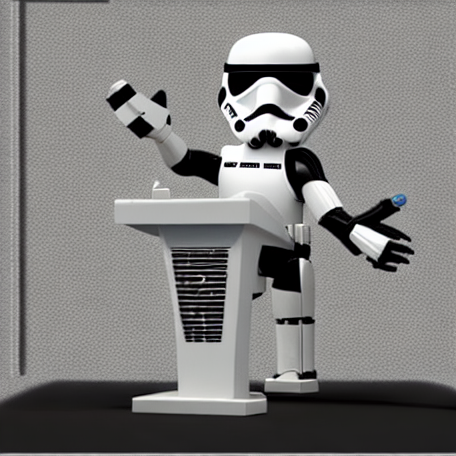

## Depth control

|

| 202 |

+

|

| 203 |

+

Depth control relies on transformers. Transformers is a dependency of diffusers for running controlnet, so

|

| 204 |

+

you should have it installed already.

|

| 205 |

+

|

| 206 |

+

```py

|

| 207 |

+

from transformers import pipeline

|

| 208 |

+

from diffusers import StableDiffusionControlNetPipeline, ControlNetModel

|

| 209 |

+

from PIL import Image

|

| 210 |

+

import numpy as np

|

| 211 |

+

|

| 212 |

+

depth_estimator = pipeline('depth-estimation')

|

| 213 |

+

|

| 214 |

+

image = Image.open('./images/stormtrooper.png')

|

| 215 |

+

image = depth_estimator(image)['depth']

|

| 216 |

+

image = np.array(image)

|

| 217 |

+

image = image[:, :, None]

|

| 218 |

+

image = np.concatenate([image, image, image], axis=2)

|

| 219 |

+

image = Image.fromarray(image)

|

| 220 |

+

|

| 221 |

+

controlnet = ControlNetModel.from_pretrained(

|

| 222 |

+

"fusing/stable-diffusion-v1-5-controlnet-depth",

|

| 223 |

+

)

|

| 224 |

+

|

| 225 |

+

pipe = StableDiffusionControlNetPipeline.from_pretrained(

|

| 226 |

+

"runwayml/stable-diffusion-v1-5", controlnet=controlnet, safety_checker=None

|

| 227 |

+

)

|

| 228 |

+

pipe.to('cuda')

|

| 229 |

+

|

| 230 |

+

image = pipe("Stormtrooper's lecture", image).images[0]

|

| 231 |

+

|

| 232 |

+

image.save('./images/stormtrooper_depth_out.png')

|

| 233 |

+

```

|

| 234 |

+

|

| 235 |

+

|

| 236 |

+

|

| 237 |

+

|

| 238 |

+

|

| 239 |

+

|

| 240 |

+

|

| 241 |

+

|

| 242 |

+

## Normal map

|

| 243 |

+

|

| 244 |

+

```py

|

| 245 |

+

from PIL import Image

|

| 246 |

+

from transformers import pipeline

|

| 247 |

+

import numpy as np

|

| 248 |

+

import cv2

|

| 249 |

+

from diffusers import StableDiffusionControlNetPipeline, ControlNetModel

|

| 250 |

+

|

| 251 |

+

image = Image.open("images/toy.png").convert("RGB")

|

| 252 |

+

|

| 253 |

+

depth_estimator = pipeline("depth-estimation", model="Intel/dpt-hybrid-midas")

|

| 254 |

+

|

| 255 |

+

image = depth_estimator(image)['predicted_depth'][0]

|

| 256 |

+

|

| 257 |

+

image = image.numpy()

|

| 258 |

+

|

| 259 |

+

image_depth = image.copy()

|

| 260 |

+

image_depth -= np.min(image_depth)

|

| 261 |

+

image_depth /= np.max(image_depth)

|

| 262 |

+

|

| 263 |

+

bg_threshold = 0.4

|

| 264 |

+

|

| 265 |

+

x = cv2.Sobel(image, cv2.CV_32F, 1, 0, ksize=3)

|

| 266 |

+

x[image_depth < bg_threshold] = 0

|

| 267 |

+

|

| 268 |

+

y = cv2.Sobel(image, cv2.CV_32F, 0, 1, ksize=3)

|

| 269 |

+

y[image_depth < bg_threshold] = 0

|

| 270 |

+

|

| 271 |

+

z = np.ones_like(x) * np.pi * 2.0

|

| 272 |

+

|

| 273 |

+

image = np.stack([x, y, z], axis=2)

|

| 274 |

+

image /= np.sum(image ** 2.0, axis=2, keepdims=True) ** 0.5

|

| 275 |

+

image = (image * 127.5 + 127.5).clip(0, 255).astype(np.uint8)

|

| 276 |

+

image = Image.fromarray(image)

|

| 277 |

+

|

| 278 |

+

controlnet = ControlNetModel.from_pretrained(

|

| 279 |

+

"fusing/stable-diffusion-v1-5-controlnet-normal",

|

| 280 |

+

)

|

| 281 |

+

|

| 282 |

+

pipe = StableDiffusionControlNetPipeline.from_pretrained(

|

| 283 |

+

"runwayml/stable-diffusion-v1-5", controlnet=controlnet, safety_checker=None

|

| 284 |

+

)

|

| 285 |

+

pipe.to('cuda')

|

| 286 |

+

|

| 287 |

+

image = pipe("cute toy", image).images[0]

|

| 288 |

+

|

| 289 |

+

image.save('images/toy_normal_out.png')

|

| 290 |

+

```
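The last steps of the normal-map preprocessing above normalize each `(x, y, z)` vector to unit length and then map its components from `[-1, 1]` to `[0, 255]`. The per-pixel arithmetic can be sketched with the standard library alone; `encode_normal` is a hypothetical helper, and the default `z` is the `np.pi * 2.0` constant taken from the snippet.

```python
import math


def encode_normal(dx, dy, z=2.0 * math.pi):
    """Normalize the vector (dx, dy, z) and map each component to 0..255."""
    length = math.sqrt(dx * dx + dy * dy + z * z)
    unit = (dx / length, dy / length, z / length)
    # Same affine map as `image * 127.5 + 127.5`, clipped and truncated
    # like `.clip(0, 255).astype(np.uint8)`.
    return tuple(int(min(255.0, max(0.0, c * 127.5 + 127.5))) for c in unit)


# A flat region has zero Sobel gradients, so the normal points along z.
flat = encode_normal(0.0, 0.0)
print(flat)  # (127, 127, 255)
```

This is why background pixels, whose gradients are zeroed by the threshold, come out in the uniform bluish color typical of normal maps.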

|

| 291 |

+

|

| 292 |

+

|

| 293 |

+

|

| 294 |

+

|

| 295 |

+

|

| 296 |

+

|

| 297 |

+

|

| 298 |

+

## Scribble

|

| 299 |

+

|

| 300 |

+

Install the additional controlnet models package.

|

| 301 |

+

|

| 302 |

+

```sh

|

| 303 |

+

$ pip install git+https://github.com/patrickvonplaten/human_pose.git

|

| 304 |

+

```

|

| 305 |

+

|

| 306 |

+

```py

|

| 307 |

+

from PIL import Image

|

| 308 |

+

from diffusers import StableDiffusionControlNetPipeline, ControlNetModel

|

| 309 |

+

import torch

|

| 310 |

+

from human_pose import HEDdetector

|

| 311 |

+

|

| 312 |

+

hed = HEDdetector.from_pretrained('lllyasviel/ControlNet')

|

| 313 |

+

|

| 314 |

+

image = Image.open('images/bag.png')

|

| 315 |

+

|

| 316 |

+

image = hed(image, scribble=True)

|

| 317 |

+

|

| 318 |

+

controlnet = ControlNetModel.from_pretrained(

|

| 319 |

+

"fusing/stable-diffusion-v1-5-controlnet-scribble",

|

| 320 |

+

)

|

| 321 |

+

|

| 322 |

+

pipe = StableDiffusionControlNetPipeline.from_pretrained(

|

| 323 |

+

"runwayml/stable-diffusion-v1-5", controlnet=controlnet, safety_checker=None

|

| 324 |

+

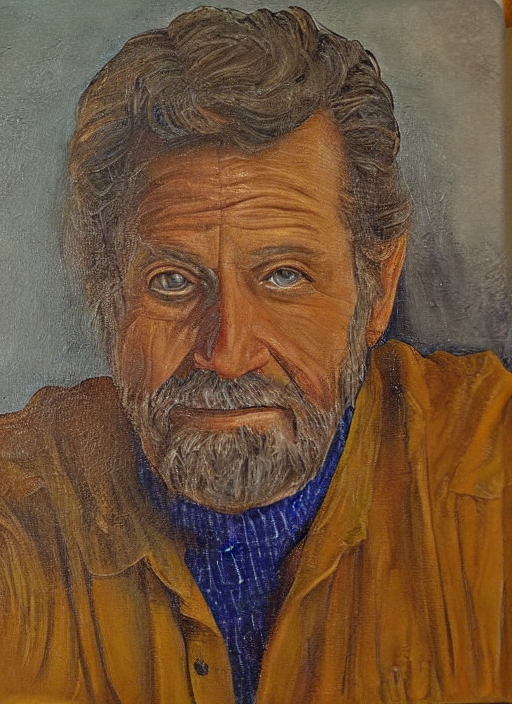

)

|

| 325 |

+

pipe.to('cuda')

|

| 326 |

+

|

| 327 |

+

image = pipe("bag", image).images[0]

|

| 328 |

+

|

| 329 |

+

image.save('images/bag_scribble_out.png')

|

| 330 |

+

```

|

| 331 |

+

|

| 332 |

+

|

| 333 |

+

|

| 334 |

+

|

| 335 |

+

|

| 336 |

+

|

| 337 |

+

|

| 338 |

+

## HED Boundary

|

| 339 |

+

|

| 340 |

+

Install the additional controlnet models package.

|

| 341 |

+

|

| 342 |

+

```sh

|

| 343 |

+

$ pip install git+https://github.com/patrickvonplaten/human_pose.git

|

| 344 |

+

```

|

| 345 |

+

|

| 346 |

+

```py

|

| 347 |

+

from PIL import Image

|

| 348 |

+

from diffusers import StableDiffusionControlNetPipeline, ControlNetModel

|

| 349 |

+

import torch

|

| 350 |

+

from human_pose import HEDdetector

|

| 351 |

+

|

| 352 |

+

hed = HEDdetector.from_pretrained('lllyasviel/ControlNet')

|

| 353 |

+

|

| 354 |

+

image = Image.open('images/man.png')

|

| 355 |

+

|

| 356 |

+

image = hed(image)

|

| 357 |

+

|

| 358 |

+

controlnet = ControlNetModel.from_pretrained(

|

| 359 |

+

"fusing/stable-diffusion-v1-5-controlnet-hed",

|

| 360 |

+

)

|

| 361 |

+

|

| 362 |

+

pipe = StableDiffusionControlNetPipeline.from_pretrained(

|

| 363 |

+

"runwayml/stable-diffusion-v1-5", controlnet=controlnet, safety_checker=None

|

| 364 |

+

)

|

| 365 |

+

pipe.to('cuda')

|

| 366 |

+

|

| 367 |

+

image = pipe("oil painting of handsome old man, masterpiece", image).images[0]

|

| 368 |

+

|

| 369 |

+

image.save('images/man_hed_out.png')

|

| 370 |

+

```
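Every example in this README follows the same pattern and differs only in the preprocessing step and the ControlNet checkpoint. As a sketch, the checkpoint ids used above can be collected in one lookup table; `CONTROLNET_CHECKPOINTS` and `checkpoint_for` are hypothetical names introduced here for illustration, while the ids themselves are copied from the examples.

```python
# Checkpoint ids exactly as they appear in the README examples.
CONTROLNET_CHECKPOINTS = {
    "canny": "fusing/stable-diffusion-v1-5-controlnet-canny",
    "mlsd": "fusing/stable-diffusion-v1-5-controlnet-mlsd",
    "openpose": "fusing/stable-diffusion-v1-5-controlnet-openpose",
    "seg": "fusing/stable-diffusion-v1-5-controlnet-seg",
    "depth": "fusing/stable-diffusion-v1-5-controlnet-depth",
    "normal": "fusing/stable-diffusion-v1-5-controlnet-normal",
    "scribble": "fusing/stable-diffusion-v1-5-controlnet-scribble",
    "hed": "fusing/stable-diffusion-v1-5-controlnet-hed",
}


def checkpoint_for(conditioning):
    """Return the ControlNet checkpoint id for a conditioning type."""
    try:
        return CONTROLNET_CHECKPOINTS[conditioning]
    except KeyError:
        known = ", ".join(sorted(CONTROLNET_CHECKPOINTS))
        raise ValueError(
            f"unknown conditioning {conditioning!r}; expected one of: {known}"
        ) from None


print(checkpoint_for("depth"))  # fusing/stable-diffusion-v1-5-controlnet-depth
```

The returned id would then be passed to `ControlNetModel.from_pretrained(...)` as in the examples above.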

|

| 371 |

+

|

| 372 |

+

|

| 373 |

+

|

| 374 |

+

|

| 375 |

+

|

| 376 |

+

|

controlnet_utils.py

ADDED

|

@@ -0,0 +1,40 @@


| 1 |

+

def ade_palette():

|

| 2 |

+

    """ADE20K palette that maps each class to RGB values."""

|

| 3 |

+

    return [[120, 120, 120], [180, 120, 120], [6, 230, 230], [80, 50, 50],

|

| 4 |

+

[4, 200, 3], [120, 120, 80], [140, 140, 140], [204, 5, 255],

|

| 5 |

+

[230, 230, 230], [4, 250, 7], [224, 5, 255], [235, 255, 7],

|

| 6 |

+

[150, 5, 61], [120, 120, 70], [8, 255, 51], [255, 6, 82],

|

| 7 |

+

[143, 255, 140], [204, 255, 4], [255, 51, 7], [204, 70, 3],

|

| 8 |

+

[0, 102, 200], [61, 230, 250], [255, 6, 51], [11, 102, 255],

|

| 9 |

+

[255, 7, 71], [255, 9, 224], [9, 7, 230], [220, 220, 220],

|

| 10 |

+

[255, 9, 92], [112, 9, 255], [8, 255, 214], [7, 255, 224],

|

| 11 |

+

[255, 184, 6], [10, 255, 71], [255, 41, 10], [7, 255, 255],

|

| 12 |

+

[224, 255, 8], [102, 8, 255], [255, 61, 6], [255, 194, 7],

|

| 13 |

+

[255, 122, 8], [0, 255, 20], [255, 8, 41], [255, 5, 153],

|

| 14 |

+

[6, 51, 255], [235, 12, 255], [160, 150, 20], [0, 163, 255],

|

| 15 |

+

[140, 140, 140], [250, 10, 15], [20, 255, 0], [31, 255, 0],

|

| 16 |

+

[255, 31, 0], [255, 224, 0], [153, 255, 0], [0, 0, 255],

|

| 17 |

+

[255, 71, 0], [0, 235, 255], [0, 173, 255], [31, 0, 255],

|

| 18 |

+

[11, 200, 200], [255, 82, 0], [0, 255, 245], [0, 61, 255],

|

| 19 |

+

[0, 255, 112], [0, 255, 133], [255, 0, 0], [255, 163, 0],

|

| 20 |

+

[255, 102, 0], [194, 255, 0], [0, 143, 255], [51, 255, 0],

|

| 21 |

+

[0, 82, 255], [0, 255, 41], [0, 255, 173], [10, 0, 255],

|

| 22 |

+

[173, 255, 0], [0, 255, 153], [255, 92, 0], [255, 0, 255],

|

| 23 |

+

[255, 0, 245], [255, 0, 102], [255, 173, 0], [255, 0, 20],

|

| 24 |

+

[255, 184, 184], [0, 31, 255], [0, 255, 61], [0, 71, 255],

|

| 25 |

+

[255, 0, 204], [0, 255, 194], [0, 255, 82], [0, 10, 255],

|

| 26 |

+

[0, 112, 255], [51, 0, 255], [0, 194, 255], [0, 122, 255],

|

| 27 |

+

[0, 255, 163], [255, 153, 0], [0, 255, 10], [255, 112, 0],

|

| 28 |

+

[143, 255, 0], [82, 0, 255], [163, 255, 0], [255, 235, 0],

|

| 29 |

+

[8, 184, 170], [133, 0, 255], [0, 255, 92], [184, 0, 255],

|

| 30 |

+

[255, 0, 31], [0, 184, 255], [0, 214, 255], [255, 0, 112],

|

| 31 |

+

[92, 255, 0], [0, 224, 255], [112, 224, 255], [70, 184, 160],

|

| 32 |

+

[163, 0, 255], [153, 0, 255], [71, 255, 0], [255, 0, 163],

|

| 33 |

+

[255, 204, 0], [255, 0, 143], [0, 255, 235], [133, 255, 0],

|

| 34 |

+

[255, 0, 235], [245, 0, 255], [255, 0, 122], [255, 245, 0],

|

| 35 |

+

[10, 190, 212], [214, 255, 0], [0, 204, 255], [20, 0, 255],

|

| 36 |

+

[255, 255, 0], [0, 153, 255], [0, 41, 255], [0, 255, 204],

|

| 37 |

+

[41, 0, 255], [41, 255, 0], [173, 0, 255], [0, 245, 255],

|

| 38 |

+

[71, 0, 255], [122, 0, 255], [0, 255, 184], [0, 92, 255],

|

| 39 |

+

[184, 255, 0], [0, 133, 255], [255, 214, 0], [25, 194, 194],

|

| 40 |

+

[102, 255, 0], [92, 0, 255]]

|

images/bag.png

ADDED

|

images/bag_scribble.png

ADDED

|

images/bag_scribble_out.png

ADDED

|

images/bird.png

ADDED

|

images/bird_canny.png

ADDED

|

images/bird_canny_out.png

ADDED

|

images/chef_pose_out.png

ADDED

|

images/house.png

ADDED

|

images/house_seg.png

ADDED

|

images/house_seg_out.png

ADDED

|

images/man.png

ADDED

|

images/man_hed.png

ADDED

|

images/man_hed_out.png

ADDED

|

images/openpose.png

ADDED

|

images/pose.png

ADDED

|

images/room.png

ADDED

|

images/room_mlsd.png

ADDED

|

images/room_mlsd_out.png

ADDED

|

images/stormtrooper.png

ADDED

|

images/stormtrooper_depth.png

ADDED

|

images/stormtrooper_depth_out.png

ADDED

|

images/toy.png

ADDED

|

images/toy_normal.png

ADDED

|

images/toy_normal_out.png

ADDED

|